Learn to talk about the limitations of technology in 6 minutes!

Catherine: Hello and welcome to 6 Minute English. I'm Catherine.

Rob: And hello, I'm Rob.

Catherine: Today we have another technology topic.

Rob: Oh good! I love technology. It makes things easier, it's fast and means I can have gadgets.

Catherine: Do you think that technology can actually do things better than humans?

Rob: For some things, yes. I think cars that drive themselves will be safer than humans but that will take away some of the pleasure of driving. So I guess it depends on what you mean by better.

Catherine: Good point, Rob. And that actually ties in very closely with today's topic, which is technochauvinism.

Rob: What's that?

Catherine: We'll find out shortly, Rob, but before we do, today's quiz question. Artificial Intelligence, or A.I., is an area of computer science that develops the ability of computers to learn to do things like solve problems or drive cars without crashing. But in what decade was the term 'Artificial Intelligence' coined? Was it: a) the 1940s, b) the 1950s or c) the 1960s?

Rob: I think it's quite a new expression so I'll go for c) the 1960s.

Catherine: Good luck with that, Rob, and we'll give you the answer later in the programme. Now, let's get back to our topic of technochauvinism.

Rob: I know what a chauvinist is. It's someone who thinks that their country or race or sex is better than others. But how does this relate to technology?

Catherine: We're about to find out. Meredith Broussard is Professor of Journalism at New York University and she's written a book called Artificial Unintelligence. She appeared on the BBC Radio 4 programme More or Less to talk about it. Listen carefully and find out her definition of technochauvinism.

Meredith Broussard: Technochauvinism is the idea that technology is always the highest and best solution. So somehow over the past couple of decades we got into the habit of thinking that doing something with a computer is always the best and most objective way to do something and that's simply not true. Computers are not objective, they are proxies for the people who make them.

Catherine: What is Meredith Broussard's definition of technochauvinism?

Rob: It's this idea that using technology is better than not using technology.

Catherine: She says that we have this idea that a computer is objective. Something that is objective is neutral, it doesn't have an opinion, it's fair and it's unbiased - so it's the opposite of being a chauvinist. But Meredith Broussard says this is not true.

Rob: She argues that computers are not objective. They are proxies for the people that make them. You might know the word proxy when you are using your computer in one country and want to look at something that is only available in a different country. You can use a piece of software called a proxy to do that.

Catherine: But a proxy is also a person or a thing that carries out your wishes and your instructions for you. So computers are only as smart or as objective as the people that program them. Computers are proxies for their programmers. Broussard says that believing too much in Artificial Intelligence can make the world worse. Let's hear a bit more. This time, find out what serious problems in society she thinks may be reflected in AI.

Meredith Broussard: It's a nuanced problem. What we have is data on the world as it is and we have serious problems with racism, sexism, classism, ageism, in the world right now, so there is no such thing as perfect data. We also have a problem inside the tech world where the creators of algorithms do not have sufficient awareness of social issues such that they can make good technology that gets us closer to a world as it should be.

Rob: She said that society has problems with racism, sexism, classism and ageism.

Catherine: And she says it's a nuanced problem. A nuanced problem is not simple, but it does have small and important areas which may be hard to spot, but they need to be considered.

Rob: And she also talked about algorithms used to program these technological systems. An algorithm is a set of instructions that computers use to perform their tasks. Essentially it's the rules that they use to come up with their answers and Broussard believes that technology will reflect the views of those who create the algorithms.

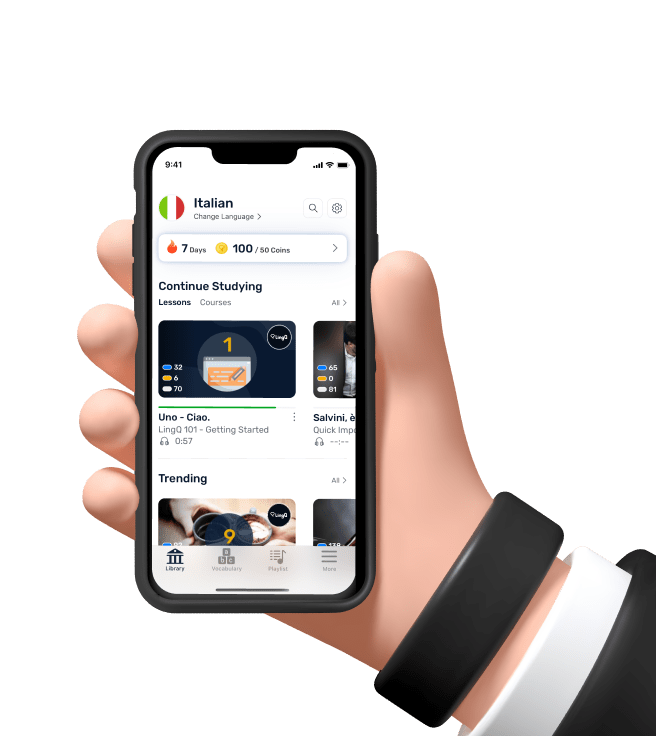

Catherine: Next time you're using a piece of software or your favourite app, you might find yourself wondering if it's a useful tool or does it contain these little nuances that reflect the views of the developer.

Rob: Right, Catherine. How about the answer to this week's question then?

Catherine: I asked in which decade was the term 'Artificial Intelligence' coined. Was it the 40s, the 50s or the 60s?

Rob: And I said the 60s.

Catherine: But it was actually the 1950s. Never mind, Rob. Let's review today's vocabulary.

Rob: Well, we had a chauvinist - that's someone who believes their country, race or sex is better than any others.

Catherine: And this gives us technochauvinism, the belief that a technological solution is always a better solution to a problem.

Rob: Next - someone or something that is objective is neutral, fair and balanced.

Catherine: A proxy is a piece of software but also someone who does something for you, on your behalf. A nuanced problem is a subtle one. It's not a simple case of right or wrong; in a nuanced problem there are small but important things that you need to consider.

Rob: And an algorithm is a set of software instructions for a computer system.

Catherine: Well, that's all we have time for today. Goodbye for now.

Rob: Bye bye!