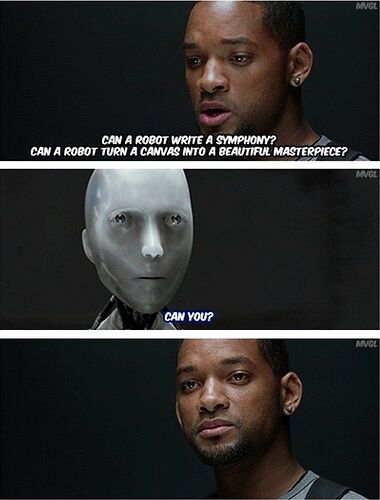

With all due respect to the author, this comparison with human “true” intelligence seems kinda lame to me. And there's some nonsense in the article, really. Like this one:

When linguists seek to develop a theory for why a given language works as it does (“Why are these — but not those — sentences considered grammatical?”), they are building consciously and laboriously an explicit version of the grammar that the child builds instinctively and with minimal exposure to information. The child’s operating system is completely different from that of a machine learning program.

I don’t even want to comment on this one.

Indeed, such programs are stuck in a prehuman or nonhuman phase of cognitive evolution. Their deepest flaw is the absence of the most critical capacity of any intelligence: to say not only what is the case, what was the case and what will be the case — that’s description and prediction — but also what is not the case and what could and could not be the case. Those are the ingredients of explanation, the mark of true intelligence.

Chomsky probably has an incredibly intelligent (on average) circle of people around him. 95% of the population can do none of these things. Or at best some of them. And only in a limited number of subjects.

But an explanation is something more: It includes not only descriptions and predictions but also counterfactual conjectures like “Any such object would fall,” plus the additional clause “because of the force of gravity” or “because of the curvature of space-time” or whatever. That is a causal explanation: “The apple would not have fallen but for the force of gravity.” That is thinking.

This is a matter of data sets. We have the luxury of multimodal data sets to prove or disprove any magical-thinking theories and adjust our knowledge of the world. And yet so many people in their adult lives believe in horoscopes and are overall prone to magical thinking, no matter what. So, the point isn’t taken.

Whereas humans are limited in the kinds of explanations we can rationally conjecture, machine learning systems can learn both that the earth is flat and that the earth is round. They trade merely in probabilities that change over time.

Humans believe in both science and God. And even flat-earthers believe in science, but deny some parts of it to make it fit their beloved theory. In general, people have a lot of messed-up, conflicting beliefs and preconceived notions in their minds.

Again, it’s a matter of data sets. We can teach a fellow human to be like this and to believe in opposite things. And vice versa, we can train a language model only on a scientifically proven data set.

Language models were never supposed to be AI in the general sense, anyway.

Human intelligence is overrated, very limited and ultimately finite. We all live off the successes of a few geniuses throughout history, layered on top of each other: short moments when these great people came up with something brilliant.